LandSwift

A B2B SaaS workflow platform that streamlines submitting and reviewing landscape designs for new homes within community developments.

Role

Researcher, Product Designer

Tools

Figma, Otter.ai, Dovetail, Zoom, Maze, ChatGPT, G Suite

Timeline

August - September 2023

Skills

User research, Synthesizing Research, Information Architecture, Customer Journey Mapping, Wireframing, Branding, Prototyping, User testing

The landscape design review process plays a critical role in community development construction, where landscape aesthetics, alongside architectural elements, help distinguish these communities.

THE PROBLEM

Outdated design review technologies lead to inefficient workflows, impacting construction timelines and home sales within community developments

The landscape design review process primarily relies on email, often resulting in inefficiencies due to the lack of centralized information. These inefficiencies not only burden builders and design reviewers with information disarray and communication issues, but also have downstream impacts on field-work coordination, construction timelines, and home sales.

THE SOLUTION

Streamline the process by integrating an AI assistant into a centralized workflow platform

Introducing LandSwift, a B2B SaaS workflow platform that employs an AI assistant named Olive to optimize the landscape design review process. Olive processes new landscape design submissions, sends reminders and status updates to all stakeholders, and automatically approves designs using AI photo recognition. In edge cases where approval isn't possible, Olive identifies the issues before passing the task to a design reviewer.

Research Phase

COMPETITOR ANALYSIS

Existing design review tools are basic electronic forms that favor design submitters over reviewers

I conducted a competitive analysis to understand how competitors facilitate landscape design reviews. Emphasis was placed on the available features, successes, and shortcomings, revealing opportunities for innovation.

Design review features in existing property management software are basic electronic forms with major limitations:

Tailored to users who submit designs rather than those responsible for reviewing them

Accessibility concerns due to color selections and font sizes

Outdated online form with poor visual hierarchy and too much text

Monday became one of my references for user-friendly workflow management software:

Automatically creates to-do lists based on timeline and status of tasks

Module tabs allow you to access different content without leaving a page

Platform-to-email connection creates seamless communication and paper trails

However, the visual clutter and extensive dashboard customization impose a heavy mental load on the user.

USER INTERVIEWS

"There hasn't really been a good solution… It's always just been emails and its kind of been on me to track everything."

Through in-depth user interviews with both builders who submit landscape designs and design reviewers, I gained comprehensive insight into participants’ first-hand experiences with the design review process. These insights validated the findings from the competitor analysis and revealed a clear opportunity for innovation.

Key Findings

"All the stamping, the approving, putting the notes... to update the files afterward... That’s more of a process than actually looking at the document."

– Sarah H, Design Reviewer

INSIGHT: Administrative tasks take more time than reviewing a design.

"When it [the design submission] went to someone's email, I don't know if it got looked at, if it's being reviewed or no one is looking at it… I have no idea."

– Hala I, Builder

INSIGHT: There is no centralized location for communication, workflows, and files. Email has traditionally served as the industry-standard platform for these functions.

"The construction manager will send me an email and say, 'Hey, do we have the landscape approval'? And I'll send it to them at that point."

– Scott Y, Builder

INSIGHT: When a design is approved, there is no guarantee that it will make it to the construction managers in the field.

"What takes the longest is getting the landscape architect to review because this is a portion of their job. This isn't their primary job."

– Amy K, Design Reviewer

INSIGHT: Reviewing landscape designs is only a portion of someone's job, so it isn’t always a priority.

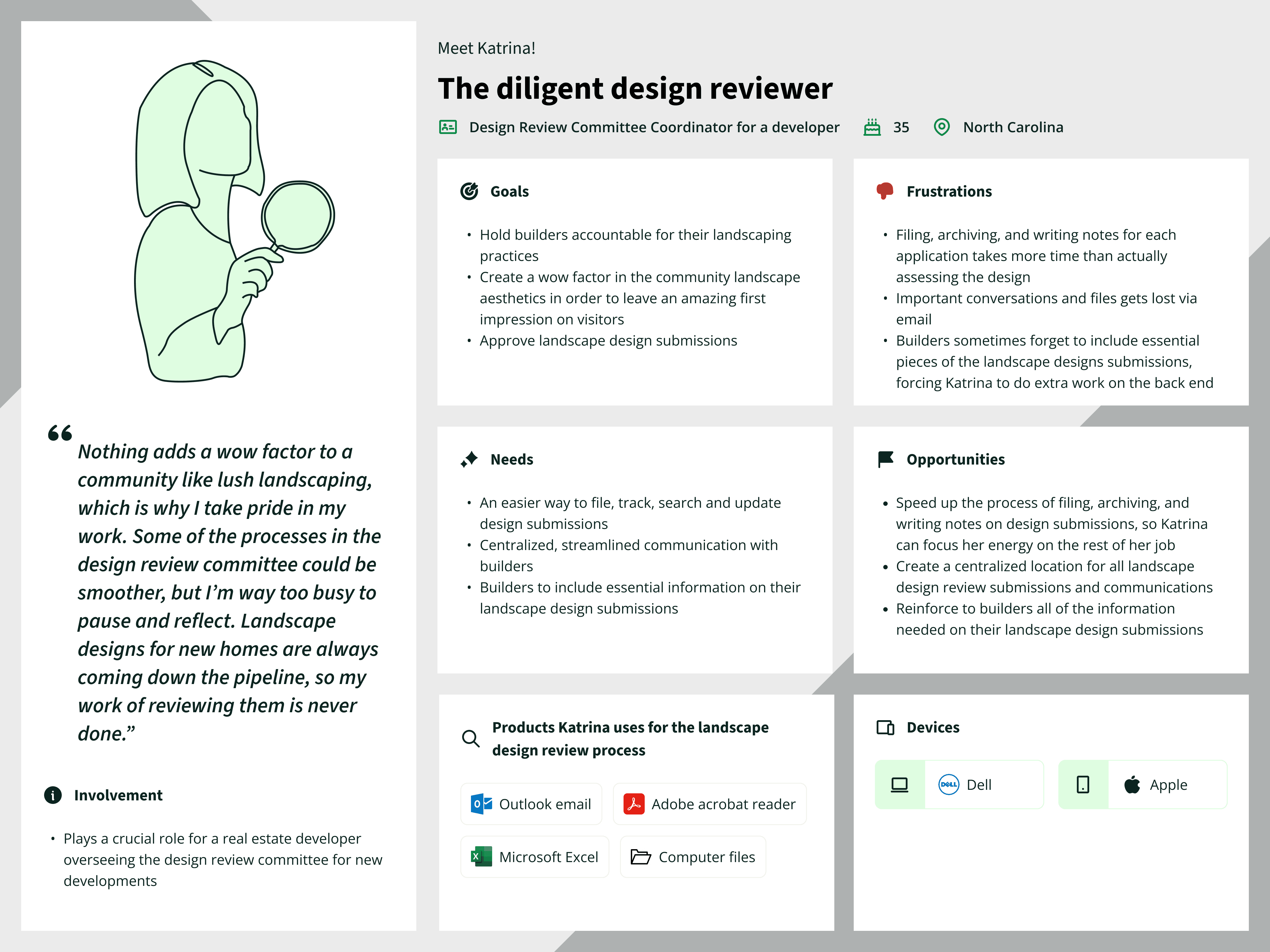

PRIMARY PERSONA

Meet the diligent design reviewer

Empathizing with the needs and frustrations of the research participants, I defined who would be using this product. The primary persona remained central to every design decision and allowed me to see the product through the users' eyes. However, it was also important that the product accommodated the secondary persona, the busy builder.

Primary Persona

Secondary Persona

CURRENT-STATE CUSTOMER JOURNEY

Visualizing the existing experience of design reviewers to build empathy and prioritize ideas

Given the complexity of the landscape design review process involving various stages and participants, I focused on deconstructing it from the perspective of the design reviewer. The objective was to gain deeper insights into the pain points experienced by these users and identify areas of disconnect. This served as a foundation for prioritizing tasks and brainstorming solutions.

THE OPPORTUNITY

How might we create a complementary workflow that helps a design review committee organize, track, search, and update landscape design submissions?

Ideation Phase

RAPID-BRAINSTORMING

The customer journey map highlighted areas of disconnect in the current design review journey. With these insights in mind, I jumped into ideation.

"Crazy 8" brainstorming sessions introduced time and creative constraints that resulted in the following impactful solution:

Create an automatic landscape design approval program utilizing AI photo recognition!

PRIORITIZATION

Narrowing down the scope with a MoSCoW matrix

Due to the time constraints associated with MVP development, it was crucial to focus on a manageable scope that could best address user needs and frustrations. The MoSCoW matrix highlighted that designing the landscape design submission overview page and the design review page would be the most impactful approach, demonstrating an innovative solution for inefficient design review workflows.

TASK FLOWS

Outlining the user journey and paving the way for usability testing

Having pinpointed areas of user disconnect through the customer journey map and refined the MVP scope using the MoSCoW matrix, I began to illustrate the user journey through task flows.

Task flows were created and prioritized based on their capacity to assist the primary persona with their goal of streamlining and consolidating the design review process into a central hub.

The selected task flow highlights an edge case within the design review process where the AI assistant couldn't auto-approve a landscape design submission. Consequently, the AI assistant identifies issues, which are then presented to the user via a sequence of checkpoints, allowing for swift resolution.
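The edge-case flow described above can be sketched in code. This is only an illustrative outline, not LandSwift's implementation: the names `Submission`, `Checkpoint`, `triage`, and `next_checkpoint` are hypothetical, and the AI photo recognition step is assumed to have already produced a list of detected issues.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional, Tuple


class Status(Enum):
    AUTO_APPROVED = "auto_approved"
    NEEDS_REVIEW = "needs_review"


@dataclass
class Checkpoint:
    """One flagged issue that the reviewer resolves or escalates."""
    issue: str
    resolved: bool = False
    comment: str = ""


@dataclass
class Submission:
    lot: str
    # Assumption: issues the AI check could not clear on its own.
    detected_issues: List[str] = field(default_factory=list)


def triage(submission: Submission) -> Tuple[Status, List[Checkpoint]]:
    """Auto-approve a clean submission; otherwise hand off checkpoints."""
    if not submission.detected_issues:
        return Status.AUTO_APPROVED, []
    checkpoints = [Checkpoint(issue) for issue in submission.detected_issues]
    return Status.NEEDS_REVIEW, checkpoints


def next_checkpoint(checkpoints: List[Checkpoint]) -> Optional[Checkpoint]:
    """Return the first unresolved checkpoint, or None when all are done."""
    return next((c for c in checkpoints if not c.resolved), None)


# Hypothetical example: two flagged items presented one after another.
status, checkpoints = triage(
    Submission(lot="Lot 14", detected_issues=["Lot size missing", "Easement overlap"])
)
current = next_checkpoint(checkpoints)
```

Presenting checkpoints one at a time mirrors the sequence in the task flow: the reviewer resolves the current item, and the next unresolved one is surfaced automatically.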

Design Phase

LOW TO MID-FIDELITY WIREFRAMES

Adding structure and function to the ideas

Working from low to mid-fidelity, I was able to explore and assess numerous designs for their effectiveness at streamlining and consolidating the design review process into a central hub.

Below is a recap of the persona's needs and how I consciously addressed them through design:

| Persona's needs | Design opportunities |
| --- | --- |
| An easier way to file, track, search and update design submissions | Use AI assistant to automatically update stakeholders on review status; utilize dashboard layout to provide users with easy access to essential information |
| Streamlined and well-documented communication with builders | Keep messages located within the submission overview page; establish a platform-email link |
| Essential information, such as lot size, included and called out in the submission to begin reviewing | Use AI photo recognition to pull essential information from property surveys and landscape designs |

The mid-fidelity wireframes prompted valuable discussions with usability and visual design experts, validating the importance of reevaluating designs at the mid-fidelity stage, especially for products featuring intricate information hierarchies.

The following reflections emerged:

The prominent image was taking up valuable visual space. Is this necessary?

More critical elements, such as the progress card and messages/notifications, were not prioritized in the visual hierarchy.

Excessive use of icon-based action buttons might negatively affect accessibility and user learnability.

The wireframes contained a large amount of information, which could overwhelm users. Could the information be separated into different pages?

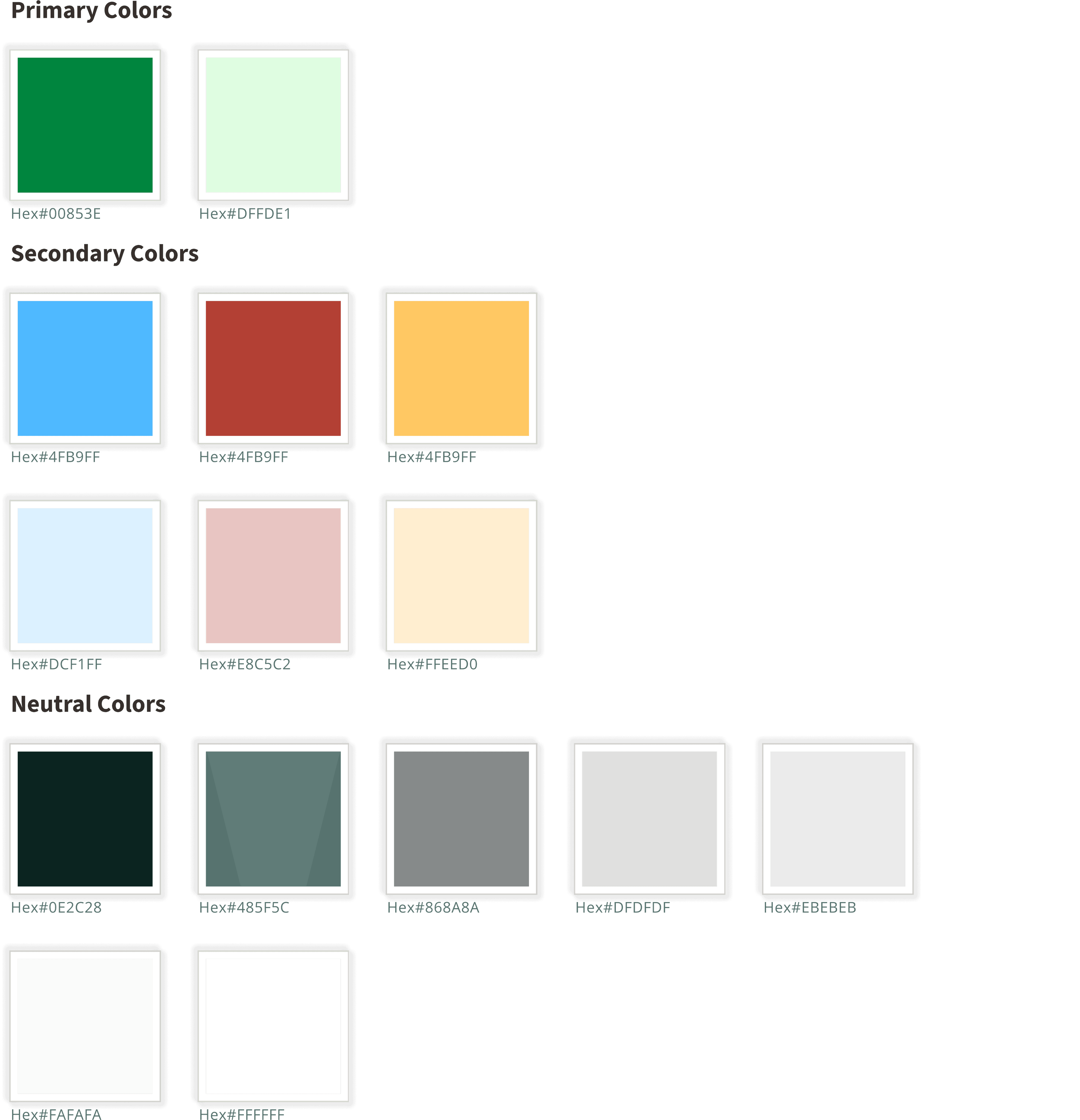

BRANDING

Neutral gradient for subtle hierarchy with splashes of color indicators

Color Selection

Typeface

Logo

Considering the information-heavy landscape design review workflow, it was essential to establish a clear visual hierarchy that doesn't overwhelm users. The neutral gradient forms a subtle foundation for this hierarchy, with the primary green aligning with the landscape theme, while the secondary colors (red, yellow, and blue) offer varying levels of user feedback that are easy to learn.

HIGH-FIDELITY WIREFRAMES

Refining the interface for a better user experience

Design discussions with usability and visual design experts at the mid-fidelity stage prompted adjustments when developing high-fidelity wireframes. In addition, I folded in the branding elements that enhance the user experience for the diligent design reviewer.

Testing & Iteration Phase

I conducted unmoderated usability testing on Maze with 17 participants.

Task tested:

Review and approve a landscape design submission.

Validating results — at a glance

Task completion rate:

94%

Average time to complete the task:

2 min 16 sec

Average rating on a scale of 1 to 10:

8.8

Likelihood of trusting the AI assistant on a scale of 1 to 10:

6.3

How do you feel about the integration of the AI assistant, Olive, to facilitate design reviews?

"I like it a lot… streamlines the process and makes it easier. Would love to know more about its accuracy, ability to pick up on minor errors, etc. I really like the interface of the review portal - great communal portal for contractor / designer / reviewer / developer."

KEY ADJUSTMENTS

Improve affordances on flagged items to make interaction more intuitive

Users conveyed frustration regarding the number of clicks necessary to review flagged items. Furthermore, users did not readily identify the "add a comment" button.

I introduced a comment input field positioned to the left of the resolve/escalate buttons, encouraging users to comment before making a decision. Additionally, I implemented an automatic transition to the next step in the task, with a brief pause to provide visual feedback confirming the action's success.

KEY ADJUSTMENTS

Strengthen feedback between the progress tracker and landscape plan

Participants expressed confusion and curiosity about the results of resolving flagged items, particularly how these changes were reflected in the landscape design and where comments were stored.

To strengthen the system's feedback, I added a visual indicator that shows when a comment has been added. I also enhanced the visual hierarchy of review components within the landscape design to reinforce the connection between the progress tracker and the landscape plan.

Results & Next Steps

THE PROBLEM

Outdated design review technologies lead to inefficient workflows, impacting construction timelines, coordination, and ultimately home sales within the community development

The landscape design review process primarily relies on email, often resulting in inefficiencies due to the lack of centralized information. These inefficiencies not only burden builders and design reviewers with information disarray and communication issues, but also have downstream impacts on field-work coordination, construction timelines, and home sales.

THE SOLUTION

Streamline the process by integrating an AI assistant into a centralized workflow platform

Introducing LandSwift, a B2B SaaS workflow platform that employs an AI assistant named Olive to optimize the landscape design review process. Olive processes new landscape design submissions, sends reminders and status updates to all stakeholders, and automatically approves designs using AI photo recognition. In edge cases where approval isn't possible, Olive identifies the issues before passing the task to a design reviewer.

NEXT STEPS

Test the accuracy of AI photo recognition in landscape design reviews

While AI photo recognition capabilities are advancing swiftly, it's crucial to assess their accuracy, especially when scanning property surveys and landscape plans with varying quality and formats. This understanding of AI's limitations will inform design choices that align with current technology capabilities, with flexibility for adjustments as AI evolves and learns the needs of the user.

Develop a design review overview page, messaging page, and landscape design submission form

Refine content for the submission overview page

Participants directly involved with design review processes indicated a need for additional details on the overview page, including data like utility easement locations and sizes. Further industry-wide surveys and usability testing are necessary to refine the information displayed, allowing for potential adaptability across various community developments.

A/B test and monitor user activity for data-backed decision-making

A/B testing is necessary to make data-backed decisions for design variations, such as the usability of the design review accordion. Additionally, user activity in the platform must be analyzed by assessing heatmaps, conversion funnels, and surveys in order to guide the next steps and make the product more efficient.
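As one possible way to evaluate such an A/B test, the sketch below compares task completion rates between two design variants with a two-proportion z-test. This is an assumption about how the comparison could be run, not a method used in this project. The function name and the counts in the usage lines are hypothetical, and the normal approximation is only reasonable with fairly large samples per variant.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in completion rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("standard error is zero; rates are all 0% or all 100%")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: completions out of participants for variants A and B.
z, p_value = two_proportion_z_test(40, 100, 60, 100)
```

A small p-value would suggest that the difference in completion rates between the two variants is unlikely to be due to chance alone. It says nothing about why one variant performs better, which is where heatmaps and surveys help.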
